Follow up post from Hashing example applied in database partitioning

Why do we need consistent hashing?

To address drawbacks with static hashing technique, to list a few,

- tight coupling with number of nodes in the distributed system makes it cumbersome to dynamically add/remove nodes

- adding/removing nodes makes us change the hash function and re-distribute load on new number of nodes by recalculating hash for all data/requests

- this introduces a downtime until this migration is complete and causes a hit on system availability, which is not what we want in a distributed system

- if a node goes down, it causes uneven distribution of load on remaining nodes

How does consistent hashing help

Consistent hashing solves the problem of rehashing by providing a distribution scheme which does not directly depend on the number of servers.

Consistent Hashing is a distributed hashing scheme that operates independently of the number of servers or objects in a distributed hash table by assigning them a position on an abstract circle, or hash ring. This allows servers and objects to scale without affecting the overall system.

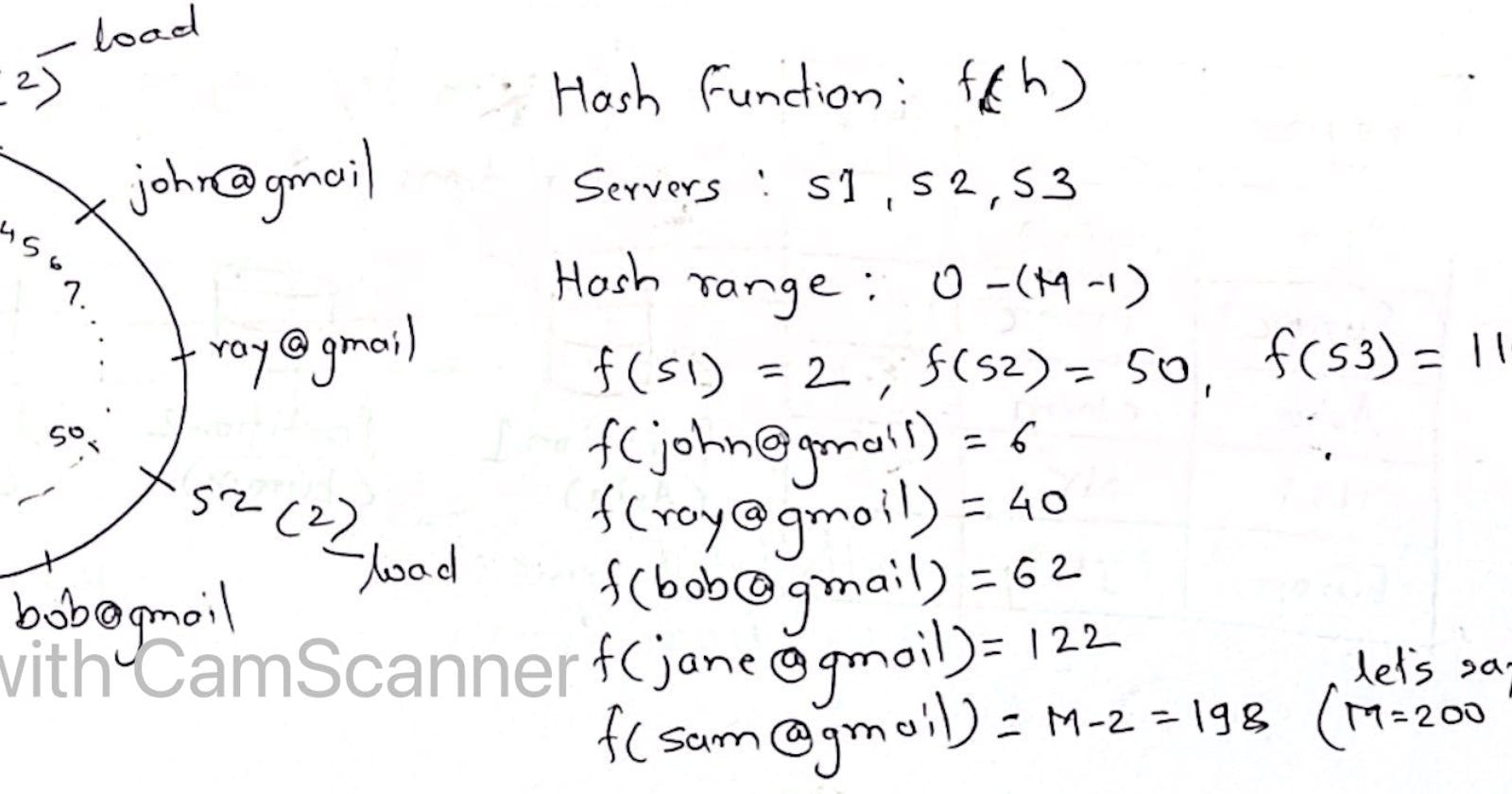

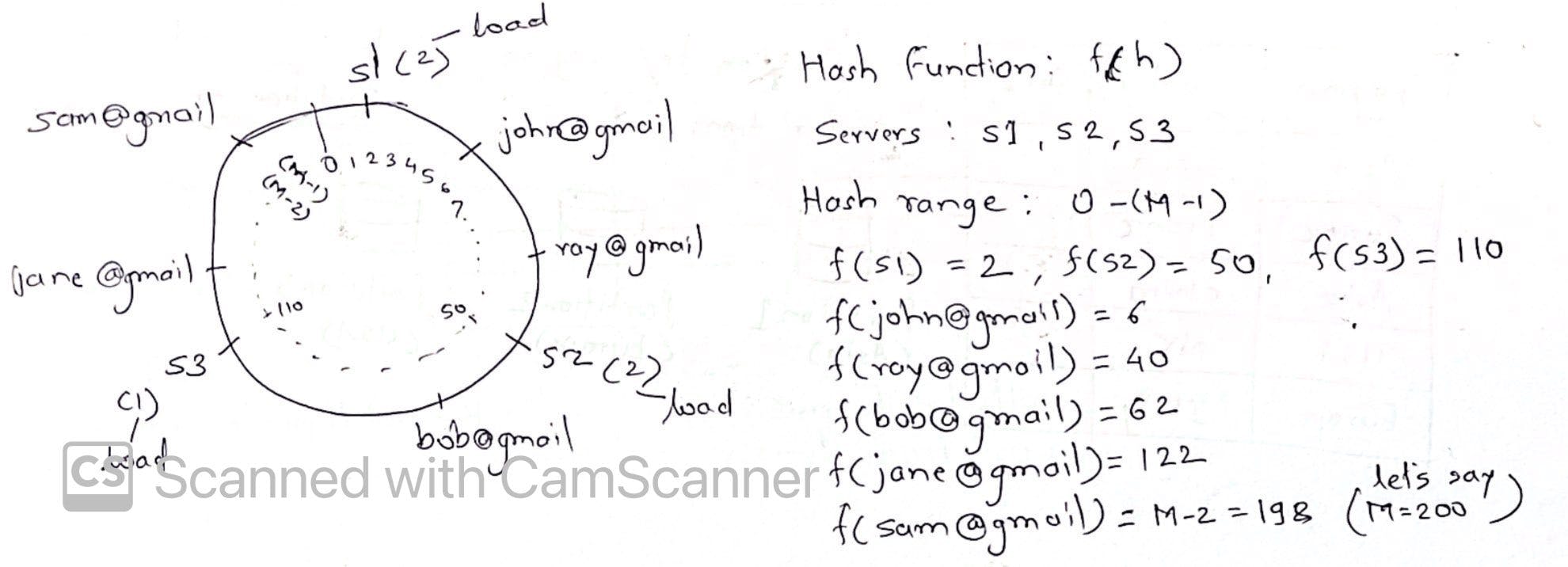

Let's take an example of consistent hashing:

- we have a distributed system serving requests from users

- we use the email addresses as partition key to distribute the load on available servers

- servers are arranged in circular fashion on a hash ring

- we use same hash function f(h) to calculate hash for servers as well as incoming requests to place each on the hash ring

- s1 handle requests from 2 users, s2 doing the same, s2 handling requests from only 1 user

- let's assume s1 goes down, the requests handled by s1 will be handled by s2. There is no need of rehashing and s3 remains unchanged

- this avoids rehashing but implementation is half baked which causes uneven load (s2 processing 4 requests vs s3 processing only 1)

Better implementation of consistent hashing

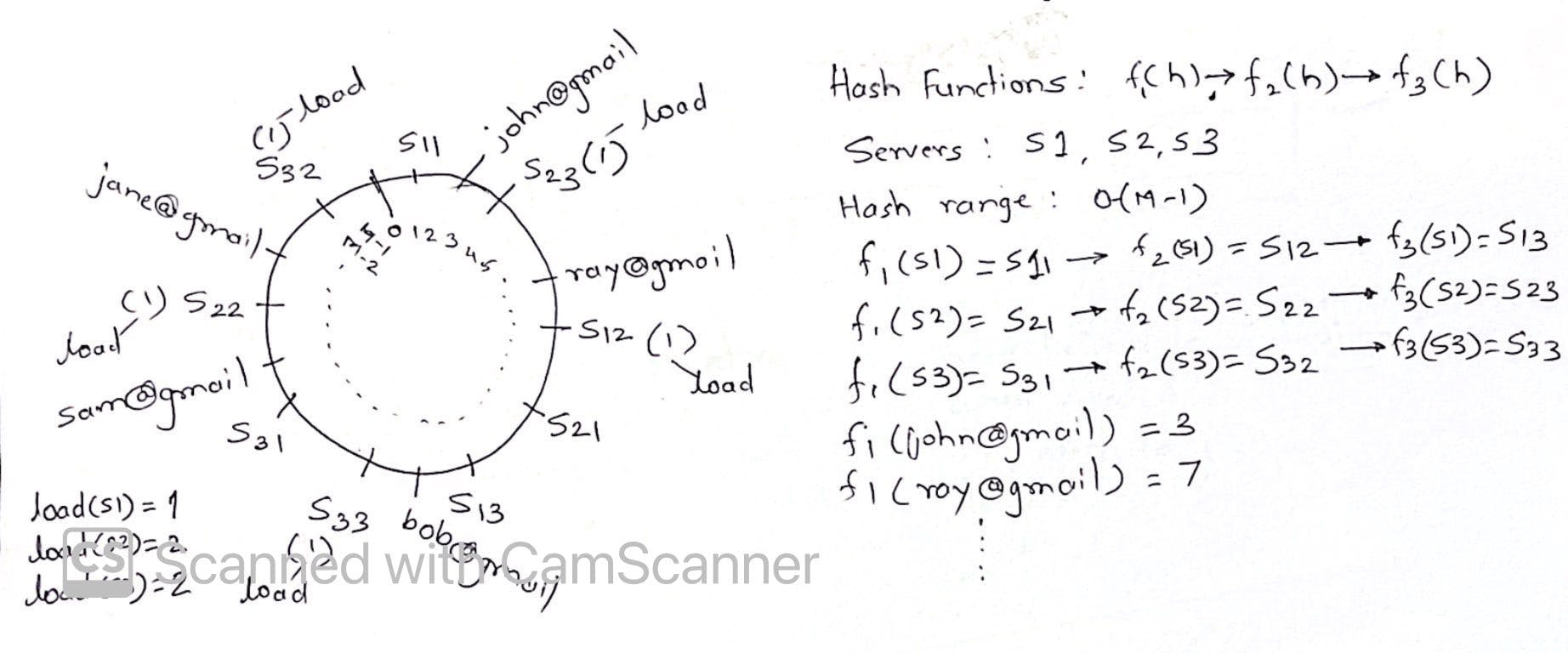

Let's extend above example and improve the placement of servers on hash ring:

- instead of using one hash function, we apply 3 different hash functions to place the nodes at multiple places on the hash ring. f1(h) -> f2(h) -> f3(h)

- These become the virtual nodes on hash ring, underlying its just one server placed virtually on hash ring making s1 as s11,s12,s13 (due to 3 hash functions) and respectively for s2,s3

- we use only f1(h) to calculate hash for users to place on hash ring similar to first approach

- s1 handle requests from only 1 user, s2 and s3 handling requests from 2 users each

- let's assume s2 goes down, so s21, s22, s23 go down

- requests from s22 will be handled by s32, requests from s23 will be handled by s12

- s1 now handles 2 requests and s3 handles 3 requests, creating even load better than what we saw earlier without virtual nodes