This article covers the most important and distinctive aspects of several multithreading concepts. Each section deserves its own deep dive, and reference books devote many pages to each of them. The aim here is to cover these concepts at a high level and provide GitHub references wherever possible.

Challenges in Multi-threading

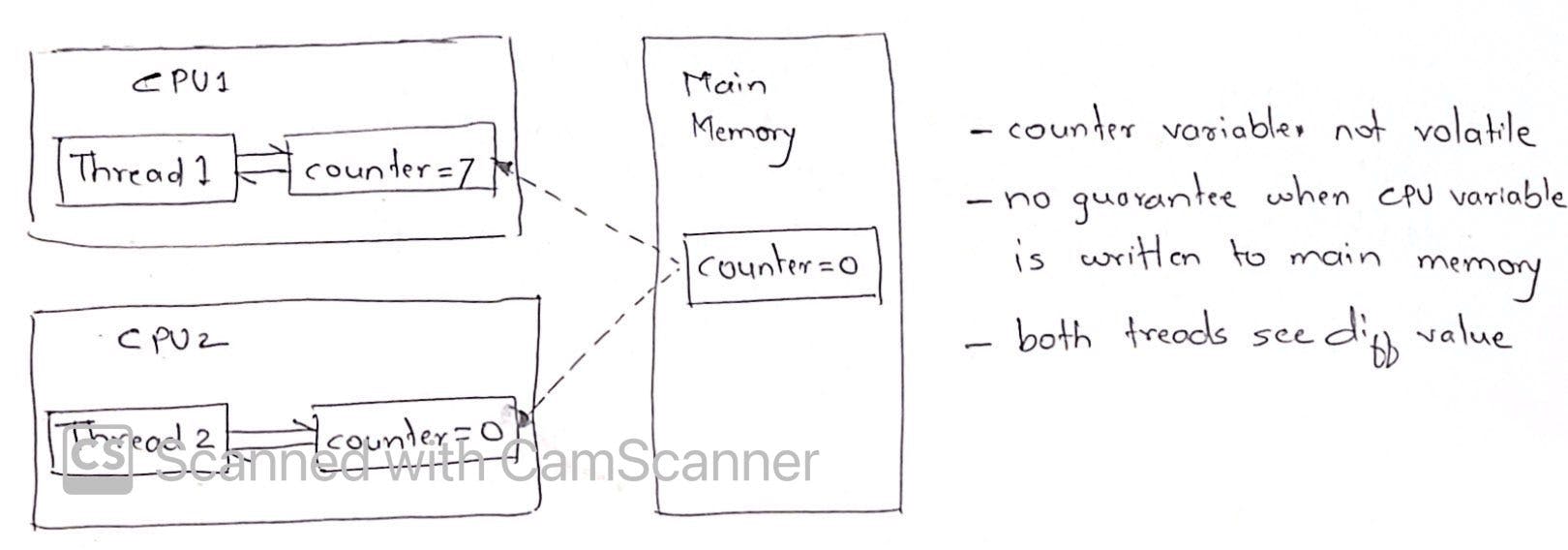

- Visibility problem: if two threads run on two different cores, a variable updated by Thread2 may not be visible to Thread1, because the new value can still be sitting in a core-local CPU cache

Addressed by the likes of volatile variables.

- Synchronization problem: variables shared between interdependent threads (e.g., a counter variable) behave unpredictably, or a critical resource (e.g., a DB connection) gets exhausted because concurrent access exceeds its capacity. These cases need synchronization to make behavior predictable or to enforce controlled access

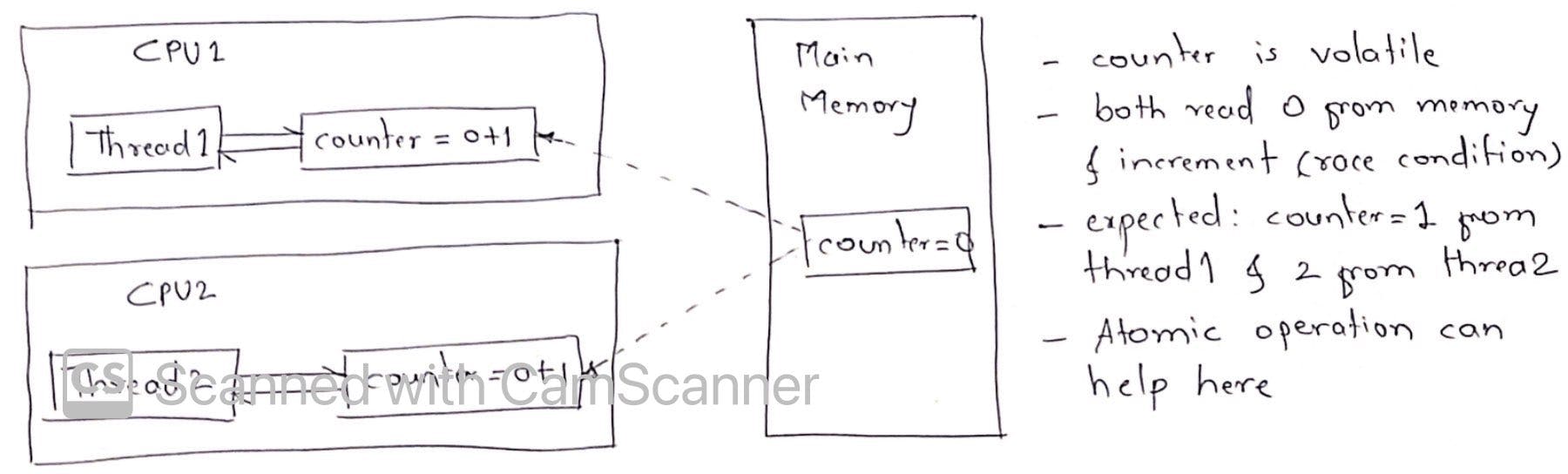

Addressed by the likes of the synchronized keyword or atomic operations.

Atomic variable

Why do we need atomic variables? Imagine a variable int count being incremented 5 times by each of 2 threads in parallel. The final value should be 10, but because the threads can overwrite each other's updates it can come out lower (for example 7 or 8) and vary from run to run. Atomic variables make sure this doesn't happen.

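How can an atomic variable help? In the example above, the increment is really three machine-level steps (read, increment, write), and another thread can interleave between them. An atomic variable relies on the compare-and-swap (CAS) operation, which the hardware executes as a single atomic instruction; an increment is built as a read followed by a CAS that is retried if another thread changed the value in between. So when a thread increments the value, it is an all-or-nothing operation.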
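As a rough illustration of the counter scenario above, the sketch below uses java.util.concurrent.atomic.AtomicInteger. The class and method names (AtomicCounterDemo, runTwoThreads) are made up for this example and are not from any referenced repository.

```java
import java.util.concurrent.atomic.AtomicInteger;

class AtomicCounterDemo {
    // Two threads each increment the same AtomicInteger five times.
    static int runTwoThreads() {
        AtomicInteger count = new AtomicInteger(0);
        Runnable work = () -> {
            for (int i = 0; i < 5; i++) {
                count.incrementAndGet(); // atomic read-modify-write, no lost updates
            }
        };
        Thread t1 = new Thread(work);
        Thread t2 = new Thread(work);
        t1.start();
        t2.start();
        try {
            // join() also makes the workers' updates visible to the caller
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return count.get();
    }
}
```

Calling AtomicCounterDemo.runTwoThreads() returns 10 on every run, whereas the same loop over a plain int field gives no such guarantee.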

A typical CAS operation works on three operands:

- The memory location on which to operate (M)

- The existing expected value (A) of the variable

- The new value (B) which needs to be set

The CAS operation atomically updates the value in M to B, but only if the existing value in M matches A; otherwise no action is taken.
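The three operands can be mapped onto AtomicInteger.compareAndSet, where the AtomicInteger itself plays the role of M. The following is a minimal sketch of a CAS retry loop, not the JDK's internal implementation; CasSketch and addWithCas are hypothetical names.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CasSketch {
    // Adds delta to the value held in 'memory' (M) using an explicit CAS retry loop.
    static int addWithCas(AtomicInteger memory, int delta) {
        while (true) {
            int expected = memory.get();      // A: the value we believe M holds
            int updated = expected + delta;   // B: the value we want to store
            if (memory.compareAndSet(expected, updated)) {
                return updated;               // M still held A, so it now holds B
            }
            // Another thread changed M in the meantime: re-read and retry.
        }
    }
}
```

For example, with an AtomicInteger holding 5, compareAndSet(5, 6) succeeds and a following compareAndSet(5, 7) fails and leaves the value at 6, because the expected value no longer matches.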

Why use atomics when we have synchronized and volatile?

- synchronized carries the cost of acquiring and releasing a lock, so it is somewhat slower, and because it is blocking it can lead to deadlocks or livelocks. Atomic operations are non-blocking and avoid these problems.

- volatile variables address the visibility problem but do not make compound operations such as count++ atomic, so on their own they do not guarantee thread safety

Volatile variable

How does volatile work?

A volatile variable addresses the visibility problem, where a write to a variable by ThreadA is not always visible to ThreadB.

Marking a variable volatile means, conceptually, that every read and write of that variable goes to main memory rather than to a thread-specific CPU cache.
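A common use of this guarantee is a stop flag shared between threads. The sketch below is illustrative only (VolatileFlagDemo and its method are invented names): a worker spins on a volatile boolean and exits once another thread clears it.

```java
class VolatileFlagDemo {
    // Without 'volatile' the worker would not be guaranteed to observe the update.
    private volatile boolean running = true;

    // Starts a worker that spins while 'running' is true, clears the flag from
    // the calling thread, and reports whether the worker stopped in time.
    static boolean stopsAfterFlagCleared(long timeoutMillis) {
        VolatileFlagDemo demo = new VolatileFlagDemo();
        Thread worker = new Thread(() -> {
            while (demo.running) {
                // busy-wait; each iteration performs a volatile read
            }
        });
        worker.setDaemon(true); // never keeps the JVM alive
        worker.start();
        try {
            Thread.sleep(50);
            demo.running = false;       // volatile write, visible to the worker
            worker.join(timeoutMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return !worker.isAlive();
    }
}
```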

Volatile is not always enough

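This is especially true when a thread must first read the current value and then compute a new value from it (as in count++). volatile guarantees that each individual read and write is visible, but not that the read-modify-write sequence is atomic, so two threads can still overwrite each other's updates. The counter example in the Atomic variable section shows exactly this race condition.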

This looks nice, should we make all variables volatile then?

That would not be wise for various reasons, performance being the primary one. Accessing main memory is expensive compared to the CPU cache, so performance deteriorates if every variable access has to go to main memory.

Further reading

- full volatile visibility guarantee

- happens-before volatile guarantee

ThreadLocal variable

ThreadLocal is the exact opposite of a volatile variable. Each thread gets its own copy of the variable, and the data is never shared across threads. So rather than making a value visible to every thread, it confines the value to the thread that set it.

ThreadLocal<Integer> intVal = new ThreadLocal<>();

How does ThreadLocal work? To put it simply, each Thread holds its own map (ThreadLocalMap) whose keys are the ThreadLocal instances (held as weak references) and whose values are that thread's copies. A call to get() looks up the calling thread's map, so the value returned depends on which thread is calling.
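The per-thread isolation can be seen in a small sketch like the one below. ThreadLocalDemo and demo() are hypothetical names used only for this illustration.

```java
class ThreadLocalDemo {
    // Each thread that touches this variable works on its own independent copy.
    private static final ThreadLocal<Integer> INT_VAL = new ThreadLocal<>();

    // Sets 1 in the calling thread, lets a second thread set 2, and returns
    // { value seen by the caller, value seen by the other thread }.
    static int[] demo() {
        INT_VAL.set(1);
        int[] seenByOther = new int[1];
        Thread other = new Thread(() -> {
            // A fresh thread has no value yet, so get() returns null here.
            boolean startedEmpty = INT_VAL.get() == null;
            INT_VAL.set(2);
            seenByOther[0] = startedEmpty ? INT_VAL.get() : -1;
        });
        other.start();
        try {
            other.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        int seenByCaller = INT_VAL.get(); // unaffected by the other thread's set(2)
        INT_VAL.remove();
        return new int[] { seenByCaller, seenByOther[0] };
    }
}
```

Here demo() returns {1, 2}: the second thread starts with an empty slot and its write never reaches the caller's copy.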

Important considerations when using ThreadLocal with thread pools

- when using a thread pool, a thread returns to the pool once its job is done

- when the same thread is borrowed from the pool again, it may still hold the previous ThreadLocal data if that was not explicitly cleaned up

- we can avoid this by manually calling remove() on the ThreadLocal once done, but that is error-prone and requires rigorous code reviews

- we can also override ThreadPoolExecutor's beforeExecute() and afterExecute() hooks to do the cleanup
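One possible shape for the hook-based cleanup is sketched below, assuming the pool knows which ThreadLocal to clear. CleaningExecutor and CONTEXT are invented for this example; a real application would clear whatever thread-local state it actually uses.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class CleaningExecutor extends ThreadPoolExecutor {
    // Hypothetical per-request context, used here only for illustration.
    static final ThreadLocal<String> CONTEXT = new ThreadLocal<>();

    CleaningExecutor(int threads) {
        super(threads, threads, 0L, TimeUnit.MILLISECONDS,
              new LinkedBlockingQueue<Runnable>());
    }

    @Override
    protected void afterExecute(Runnable r, Throwable t) {
        super.afterExecute(r, t);
        CONTEXT.remove(); // runs on the worker thread that just executed the task
    }

    // Runs two tasks on a single pooled thread; the second task reports
    // whatever the first one left behind in CONTEXT.
    static String valueSeenBySecondTask() {
        CleaningExecutor pool = new CleaningExecutor(1);
        try {
            Runnable write = () -> CONTEXT.set("request-1");
            Callable<String> read = CONTEXT::get;
            pool.submit(write).get();
            return pool.submit(read).get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }
}
```

With the afterExecute override in place, valueSeenBySecondTask() returns null. Without it, the second task on the same worker thread would see "request-1".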

ThreadLocal and Memory Leak

In web and application servers like Tomcat or WebLogic, a web app is loaded by a different ClassLoader than the one used by the server itself. This ClassLoader loads and unloads the classes of the web application. Web servers also maintain a thread pool, a collection of worker threads, to serve HTTP requests. Now if a Servlet or any other Java class from the web application creates a ThreadLocal variable during request processing and doesn't remove it afterwards, a copy of that object remains with the worker thread. Since the life span of the worker thread is longer than that of the web app itself, it prevents the object, and the ClassLoader that loaded the web app, from being garbage collected. This creates a memory leak in the server.
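A common defensive pattern against this kind of leak is to pair every set() with a remove() in a finally block, so the worker thread never keeps a reference after the request ends. The sketch below is a simplified stand-in for request handling; RequestContext, handle and isClean are hypothetical names.

```java
class RequestContext {
    // Hypothetical holder for per-request data; the names are illustrative.
    private static final ThreadLocal<StringBuilder> BUFFER = new ThreadLocal<>();

    // Simulates handling one request on the current (possibly pooled) thread.
    static String handle(String payload) {
        BUFFER.set(new StringBuilder());
        try {
            BUFFER.get().append("handled:").append(payload);
            return BUFFER.get().toString();
        } finally {
            BUFFER.remove(); // drop the reference so the worker thread does not retain it
        }
    }

    // True if the current thread holds no leftover value.
    static boolean isClean() {
        return BUFFER.get() == null;
    }
}
```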

Read more: javarevisited.blogspot.com/2013/01/threadlo..

Mutex

What is a mutex? A mutex (mutual exclusion) limits access to a critical resource, in a multithreaded environment, to only one thread at a time. It is the simplest form of synchronizer.

Example (not thread-safe as written):

public class SequenceGenerator{

private int count = 0;

public int getSequence(){ return count++; }

}

How to implement a mutex? There are more ways to do this using third-party libraries such as Guava (Monitor); the basic ways are covered here.

- using a synchronized method

public synchronized int getSequence(){ return count++; }

- using a synchronized block

private final Object mutex = new Object();

public int getSequence(){ synchronized(mutex){ return count++; } }

- using ReentrantLock

private final ReentrantLock mutex = new ReentrantLock();

public int getSequence(){ mutex.lock(); try{ return count++; } finally{ mutex.unlock(); } }

- using a Semaphore with its limit set to 1, as explained below
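To check that a lock-based generator really hands out each number once, it can be driven from several threads. The following is a self-contained sketch built on the ReentrantLock approach; LockedSequenceGenerator and nextAfter are names chosen for this example.

```java
import java.util.concurrent.locks.ReentrantLock;

class LockedSequenceGenerator {
    private final ReentrantLock mutex = new ReentrantLock();
    private int count = 0;

    int getSequence() {
        mutex.lock(); // acquire before try so unlock() only runs if the lock is held
        try {
            return count++;
        } finally {
            mutex.unlock();
        }
    }

    // Calls getSequence() from several threads, then returns the next value.
    static int nextAfter(int threads, int callsPerThread) {
        LockedSequenceGenerator gen = new LockedSequenceGenerator();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < callsPerThread; j++) {
                    gen.getSequence();
                }
            });
            workers[i].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return gen.getSequence();
    }
}
```

With 4 threads making 1000 calls each, nextAfter(4, 1000) returns 4000, which shows that no increment was lost.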

TODO: insert github references

Semaphore

A semaphore is used to limit the number of concurrent accesses to a resource.

import java.util.concurrent.Semaphore;

class SemaphoreTest{

private Semaphore semaphore;

public SemaphoreTest(int limit){

semaphore = new Semaphore(limit);

}

// acquires a permit, blocking until one is available

public void getResourceBlocking() throws InterruptedException{

semaphore.acquire();

}

// try to acquire permit, returns false if not available instead of blocking

public boolean getResourceNonBlocking(){

return semaphore.tryAcquire();

}

// releases the lock/permit

public void releaseResource(){

semaphore.release();

}

// returns available slots

public int availablePermits(){

return semaphore.availablePermits();

}

}
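The non-blocking behavior is easiest to see with a small limit. This sketch uses java.util.concurrent.Semaphore directly; SemaphoreSketch and tryThreeOfTwo are illustrative names.

```java
import java.util.concurrent.Semaphore;

class SemaphoreSketch {
    // Tries to take three permits from a semaphore that only has two,
    // then releases one and tries again.
    static boolean[] tryThreeOfTwo() {
        Semaphore semaphore = new Semaphore(2);
        boolean first = semaphore.tryAcquire();
        boolean second = semaphore.tryAcquire();
        boolean third = semaphore.tryAcquire();        // no permits left
        semaphore.release();                           // hand one permit back
        boolean afterRelease = semaphore.tryAcquire(); // succeeds again
        return new boolean[] { first, second, third, afterRelease };
    }
}
```

The result is {true, true, false, true}. Creating the semaphore with a limit of 1 gives the mutex behavior mentioned in the previous section.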

TODO: insert github reference

Apache Commons Lang has TimedSemaphore, which releases all permits at the end of each time period. It is initialized as new TimedSemaphore(period, TimeUnit.SECONDS, limit), where period is a long and limit is an int.